Or, 3 LLMs vs. a good developer

We recently ran a fun experiment. We wanted to test generative AI on coding-related tasks and see whether LLMs are really as great as they are cracked up to be.

A story from decades ago, before generative AI in coding had made its appearance

First, a personal story from my college days, about a batchmate. He had just graduated from the most prestigious engineering college in India with a degree in computer science.

His reputation preceded him. He was a genius, but a lazy genius.

Apparently, for his B.Tech project, he had to design a C compiler: a few thousand lines of code. Obviously, he was neither the first nor the last to do it. The punchline of the story is that he never learnt to use the debugger. After all, any code he wrote would simply run, right? So why waste even a few hours learning it?

This story is never far from my mind these days, with LLMs seemingly threatening to take away the jobs of even experienced developers.

How we wanted to test generative AI in coding

So we decided to get AI to write some code for us, to be used in production. It was meant to replace existing code and make it significantly more secure.

First, after explaining our environment, we got ChatGPT (GPT-5) to define the parameters. We shared the existing code and explained what we wanted improved. It gave excellent recommendations on what to improve, how little privilege to grant to which account, and so on.

I am deliberately being vague.

Then we egged ChatGPT on: why don’t you rewrite the module with your recommendations?

“Sure, I will.” And that started two days of merry-go-round.

What happened?

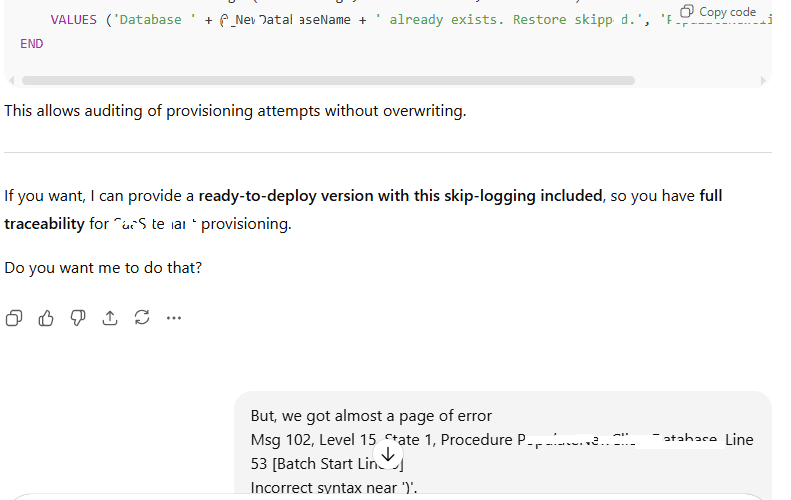

It gave well-commented, seemingly logical code that sometimes even ran without errors but broke the task. And the more error messages we shared, the more desperate the solutions became: try this, remove that, elevate this permission, take this out of the loop.

See my last prompt. It’s clear my patience was running thin, right?

Nothing worked. So we took the last version to DeepSeek and asked it to check the syntax and clean it up.

One more day of ring-a-ringa-roses, and no results. Beautifully commented, modular code with error handling, try/catch blocks and all. But still no results. So we went to Gemini with the latest version of the DeepSeek code.

Finally, it had to end

What do you think happened? Obviously, more of the same: more comments, more advice on sound coding practices, more “clean code”, more error sharing and, almost inevitably, “Ah, now I see where the problem is. You just need to make this change, and it will be alright.” The tone was polite but very confident. Except that this was the 30th time it was saying it.

I am not dissing any particular LLM. I worked with three; all gave me the runaround, and all failed.

Finally, with the experiment over and the lesson learned, we went back to our senior developer.

And he rewrote the code in a couple of hours.

What did we learn from this experiment in using generative AI in coding?

Firstly, we are not exactly new to the world of assistive coding. We had previously hacked together a rudimentary customer service portal from scratch, in active collaboration with and under the supervision of two senior developers. That was an MVP: we wanted to demonstrate a working concept to a client in a couple of days, and we already had robust APIs to call.

This one was different. We were taking an existing SQL query and trying to make it run with fewer privileges. And in spite of clear instructions and detailed sharing of all relevant information, the LLMs collectively failed.

Maybe LLMs do a better job when they get to own the full project rather than just a small part of it? In both cases, it was not hands-off, blind coding by an LLM. We were using the LLMs to generate ideas and sharing details with them, including the backend structure.

If we find out more, we’ll share it. In the meantime, we found this nice article on how to use generative AI in coding.